Troubleshooting

Overview

A render farm can be a complex system, which can make diagnosing and resolving problems in the render pipeline difficult. This section covers many of the common issues that can occur when using a Deadline-based render farm.

Isolating Render Errors from Deadline

The most difficult problems to diagnose tend to happen within the rendering process itself. It is usually easier to find the root of such a problem by rendering outside of Deadline on the machine where the render failed. Many applications can be diagnosed this way, but the jobs used for these types of tests should meet the following requirements:

The error must be reproducible with batch mode disabled (Nuke, Maya, Cinema 4D, Softimage, etc.).

Ideally, the scene file was not submitted with the job.

Ideally, path mapping was not needed for the scene.

It is possible to work around these limitations in many cases, but for simplicity it’s best to reproduce the issue while satisfying the above requirements. If an existing job doesn’t meet these requirements, try creating a new job that does, using the same scene file and submission settings. For example, to avoid needing to map paths, submit the new job from a machine running the same OS as the machine that generated the original error.

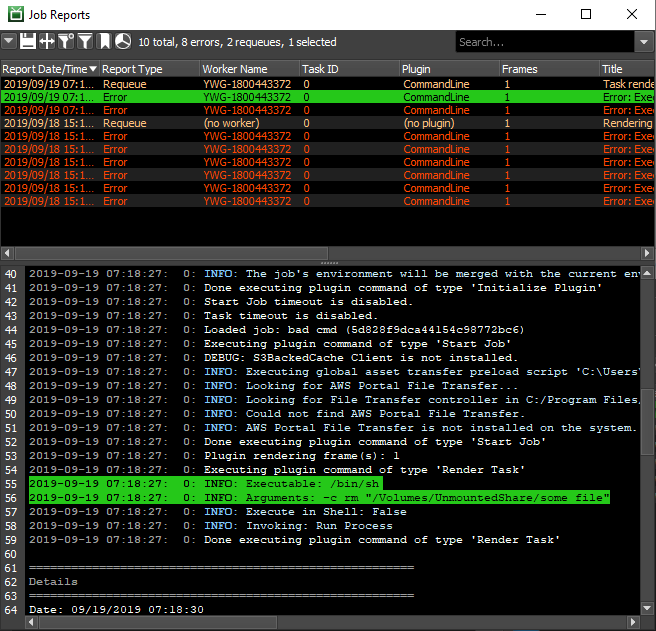

Once a job has been submitted and has generated the error you would like to resolve, view the job reports for the render and find which machines had problems. Take note of the following items from the reports:

Which machine(s) threw the error.

What the “Full Command:” line says about which program was run and what arguments were passed. If there is no “Full Command:” line, note the following instead:

What the “Render Executable:” line says about the program which was run.

What the “Render Arguments:” line says about the options given to that program.
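When there are many reports to go through, pulling these lines out by hand gets tedious. The Python sketch below scans the text of a saved job report for the lines listed above, preferring “Full Command:” and otherwise joining “Render Executable:” and “Render Arguments:”. It assumes each value sits on the same line as its label, possibly after a prefix; the exact layout of real Deadline reports may differ, so treat the label matching as an assumption to check against your own reports. The function names are hypothetical.

```python
from typing import Optional


def _find_value(report: str, label: str) -> Optional[str]:
    """Return the text after the first occurrence of `label`, or None.

    The label is searched anywhere in a line, since report lines may carry
    prefixes (assumed) in front of it.
    """
    for line in report.splitlines():
        index = line.find(label)
        if index != -1:
            return line[index + len(label):].strip()
    return None


def extract_command(report: str) -> Optional[str]:
    """Hypothetical helper: recover the command line to re-run by hand.

    Prefers the "Full Command:" line; otherwise joins the executable and
    arguments lines. Returns None if no executable can be found.
    """
    full = _find_value(report, "Full Command:")
    if full:
        return full
    executable = _find_value(report, "Render Executable:")
    arguments = _find_value(report, "Render Arguments:")
    if executable is None:
        return None
    # Join with a single space; quoting is left exactly as it was logged.
    return f"{executable} {arguments}".strip() if arguments else executable
```

If the executable path contains spaces and is not already quoted in the report, you may need to add quotes before running the result.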

With this information, we have everything we need to test the render manually. Here is a synthetic example:

We can find the machine that threw the error at the bottom of the log, and the command to run on the command line (either the full command, or the executable followed by its arguments) is:

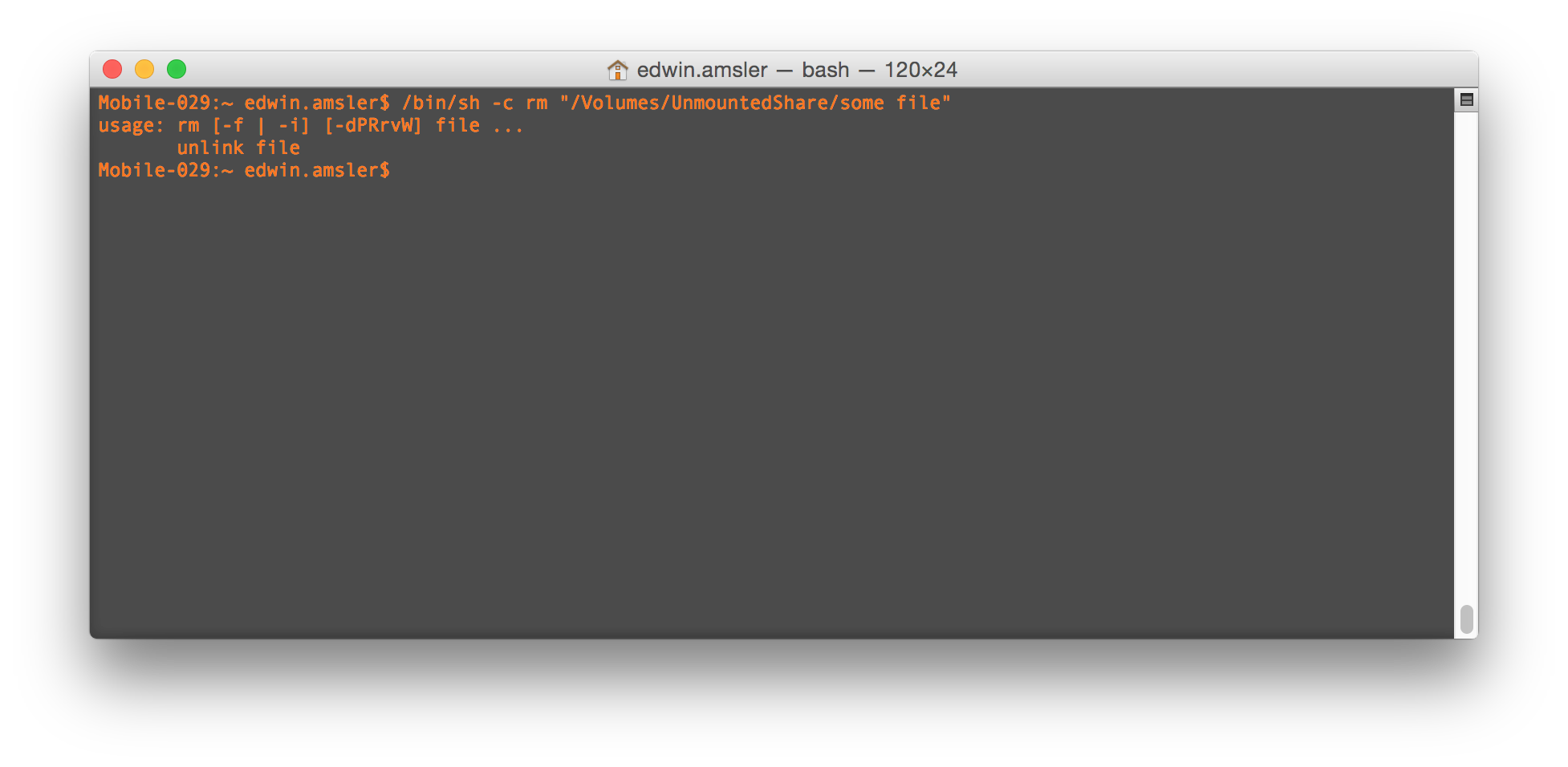

/bin/sh -c rm "/Volumes/UnmountedShare/some file"

The next step is to run the command yourself from the Command Prompt, Terminal, or shell, as applicable.

If the output on the command line matches the STDOUT lines from the log above, you have reproduced the error outside of Deadline, and that gives you a starting point for figuring out the problem. If Deadline complained about an exit code in the log, run echo %ERRORLEVEL% in the Command Prompt on Windows or echo $? on macOS and Linux immediately afterward to see the exit code the program returned when it closed. In general, any exit code other than zero denotes an error.
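If you want to script the re-run, for example to repeat it while changing one thing at a time, the Python sketch below runs a command line copied from a report and returns its exit code along with its output, mirroring the echo check described above. It assumes a POSIX-style command line: `shlex.split` follows POSIX shell quoting, so a quoted path such as "/Volumes/UnmountedShare/some file" stays a single argument. Windows command lines use different quoting rules and are not handled here. The function name `rerun` is hypothetical.

```python
import shlex
import subprocess
from typing import Tuple


def rerun(command_line: str) -> Tuple[int, str, str]:
    """Hypothetical helper: run a POSIX-style command line copied from a job
    report and return (exit code, stdout, stderr).

    Zero means success; any other value denotes an error, as with `echo $?`.
    On POSIX, a negative value means the process was killed by that signal.
    If the executable itself cannot be found, FileNotFoundError is raised.
    """
    # Split with POSIX shell quoting rules so quoted paths stay one argument.
    args = shlex.split(command_line)
    # No shell=True: the program is started directly with the split arguments.
    result = subprocess.run(args, capture_output=True, text=True)
    return result.returncode, result.stdout, result.stderr
```

Splitting the synthetic command above this way gives `['/bin/sh', '-c', 'rm', '/Volumes/UnmountedShare/some file']`, which makes it easy to confirm exactly which arguments the program receives.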

Once you have run the render outside of Deadline, there are several steps you can take. For example, if the program runs properly from the command line but fails under Deadline, the problem is likely environment variables that are not yet set for the Deadline process on that machine. As a test, restart the rendering machine and requeue the job within Deadline to see if the problem has cleared. As another example, if the error was due to a missing plugin, install that plugin and re-run the render command manually to see if the error has cleared.
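One way to check the environment-variable theory is to capture the environment in both contexts and compare them: save the output of `env` (macOS and Linux) or `set` (Windows Command Prompt) from your own shell and, if you can, the same output from a process launched by Deadline on that machine. The Python sketch below parses two such dumps, assumed to contain one `NAME=value` line per variable, and reports what differs. The function names are hypothetical, multi-line values are not handled, and names are compared case-sensitively, which does not match Windows behavior.

```python
from typing import Dict, Optional, Tuple


def parse_env_dump(text: str) -> Dict[str, str]:
    """Parse `NAME=value` lines, as printed by `env` or `set`, into a dict.

    Lines without a name before the first "=" are skipped. Only the first
    "=" separates name from value, so values containing "=" are kept whole.
    """
    env: Dict[str, str] = {}
    for line in text.splitlines():
        name, sep, value = line.partition("=")
        if sep and name:
            env[name] = value
    return env


def diff_env(
    shell_dump: str, deadline_dump: str
) -> Dict[str, Tuple[Optional[str], Optional[str]]]:
    """Hypothetical helper: return {name: (shell value, Deadline value)} for
    every variable that differs or is missing on one side (None = missing)."""
    shell = parse_env_dump(shell_dump)
    deadline = parse_env_dump(deadline_dump)
    return {
        name: (shell.get(name), deadline.get(name))
        for name in sorted(shell.keys() | deadline.keys())
        if shell.get(name) != deadline.get(name)
    }
```

Variables that appear only in the shell dump are candidates for settings the Deadline process has not picked up yet.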

You should now have everything you need to start addressing the root cause of the problem without the overhead of using the Monitor for each test. If you need additional help, ask your reseller or send an e-mail to support@thinkboxsoftware.com. Please save the job report and include it when you contact the Thinkbox Software Support team.